- Jensen (Jinghao) Zhou 1,2,*

- Hang Gao 1,3,*

- Vikram Voleti 1

- Aaryaman Vasishta 1

- Chun-Han Yao 1

- Mark Boss 1

- Philip Torr 2

- Christian Rupprecht 2

- Varun Jampani 1

Hi there,

We are excited to present Stable Virtual Camera (SEVA) , a generalist diffusion model for Novel View Synthesis (NVS). Given any number of input views and their cameras, it generates novel views of a scene at any target camera of interest.

The highlight of this model is that, when all cameras form a

trajectory, the generated views are 3D-consistent, temporally

smooth, and—true to its name—"Stable", delivering a seamless

trajectory video.

It requires no explicit 3D, generalizes well in the wild, and

is versatile to different NVS tasks.

We are naming this model in tribute to the

Virtual Camera

cinematography technology, a pre-visualization technique to

simulate real-world camera movements. As such, we hope Stable

Virtual Camera can serve as a creative tool for the general

community.

Best,

Authors of Stable Virtual Camera

Gallery

Single Input View

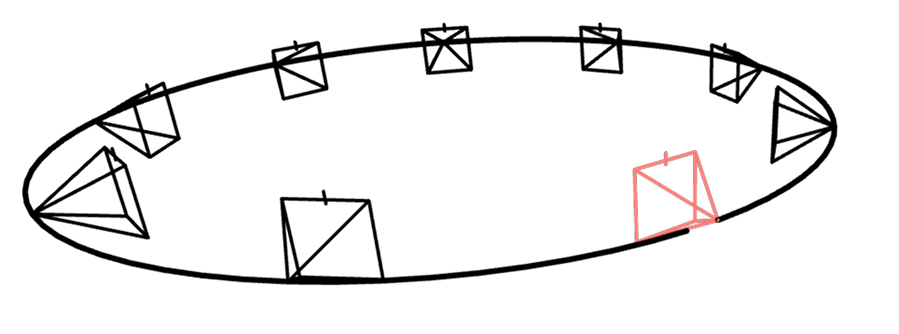

Stable Virtual Camera can generate high-fidelity novel views from a single image. Here, we show a few examples with simple camera trajectories.

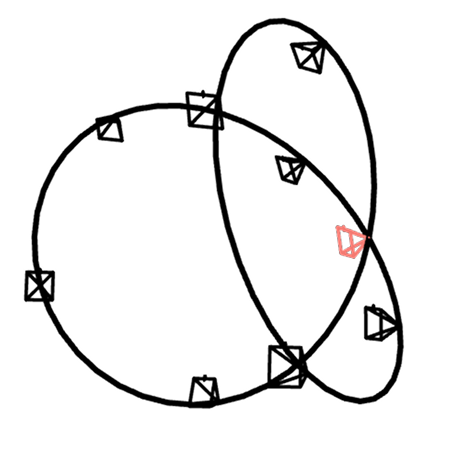

Free Camera Trajectory

Stable Virtual Camera can generate videos following any user-specified camera trajectories. Results below are mainly obtained through our gradio demo where users can perform keyframe-based trajectory editing.

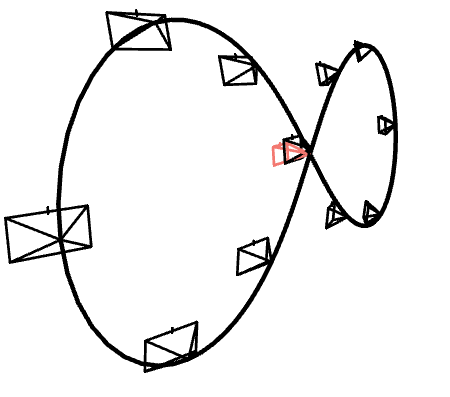

Flexible Number of Input Views

Stable Virtual Camera can theoretically take any number of input view(s).

In our experiments, we test from 1 to 32 input views and find that the performance improves with more views, especially when flying through a large scene. The capability of handling semi-dense views, say 32, is an interesting generalization of our approach, which has not been shown in diffusion-based view synthesis before. We recommend you to pause the video and slide around to see the difference.

Flexible Image Resolution

Our method is capable of generating views of different aspect-ratios, remarkably, in a zero-shot manner, despite being trained exclusively on \(576 \times 576\) square images. In the results below, we take the same pair of views as input, and sweep over different resolutions for target views.

Long Video with Loop Closure

Stable Virtual Camera can generate long videos by rolling out samples. We test this capability by generating videos up to 1000 frames. Historically, loop closure measures the 3D consistency of estimates when the camera returns to the same location after a long trip. While trivial for NeRFs, diffusion models lack persistent representation and struggle with such problem. Our approach shows a promising step forward in this direction.

Here we show both a baseline and our method's results. Note the building in front of and the bush behind the telephone booth. Our method maintains consistency when revisiting the same viewpoint, while the baseline shows noticeable differences. Please refer to our paper for more details.

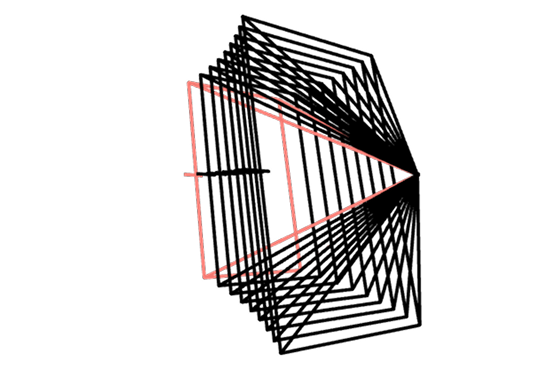

Sampling Diversity

Given sparse input views, novel view synthesis is ambiguous. Stable Virtual Camera captures such ambiguity from which we can sample differedt possible scenes.

Benchmark

We estabilish a comprehensive benchmark that evaluates NVS methods across different datasets and settings. Stable Virtual Camera sets new state-of-the-art results. We believe this effort will nurture the academic community. Please refer to paper for more details.

Comparison with Existing Models

We qualitatively compare Stable Virtual Camera with ViewCrafter, an open-source video diffusion model, and our reproduction of CAT3D, a proprietary multi-view diffusion model. We consider two tasks: large-viewpoint NVS which emphasizes on generation capacity, and small-viewpoint NVS which emphasizes on temporal smoothness.

ViewCrafter doesn't support this task natively: it assumes start-end interpolation and a fixed number of frames to be generated. We therefore chunk the task into multiple of 25 frames with nearest-neighbor start-end input frame association.

Limitations

Like any method, Stable Virtual Camera has its limitations. Here we show two typical failure modes.

Useful Links

- CAT3D: Create Anything in 3D with Multi-View Diffusion Models, which inspired us to explore diffusion models for view synthesis.

- LVSM: A Large View Synthesis Model with Minimal 3D Inductive Bias, which is a great work for feed-forward view synthesis modeling.

- History-Guided Video Diffusion, which also shows impressive results on long video generation.

- DUSt3R: Geometric 3D Vision Made Easy, which we used as pose estimator for multiple images in the wild.